The Unseen Dangers of Complex Systems

The healthcare industry stands at a pivotal moment in its adoption of artificial intelligence, with the potential to transform patient care, diagnostics, and treatment protocols. However, this technological revolution carries significant risks that mirror those encountered in other safety-critical industries. While much attention focuses on AI’s capabilities, we must also examine the systemic failures that can occur when complex systems are deployed without adequate safeguards and understanding.

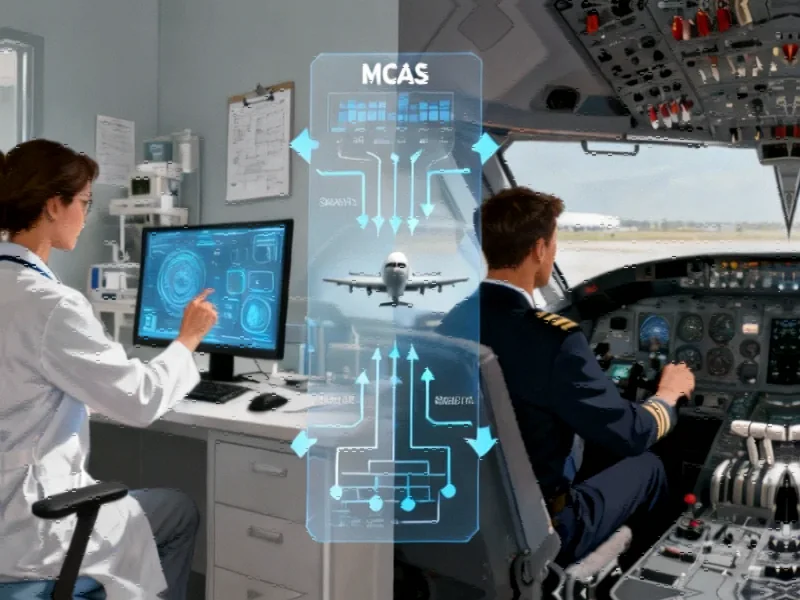

The aviation industry’s experience with the Boeing 737 MAX’s Maneuvering Characteristics Augmentation System (MCAS) provides a sobering case study. MCAS was not AI, but like many AI-enabled systems it acted autonomously on sensor data. It used input from a single angle-of-attack sensor to command nose-down stabilizer trim, and pilots were given little awareness of the system and no training for it. The result was catastrophic: two crashes killed 346 people. They are a stark reminder that technological advancement without proper oversight can have devastating consequences.

Striking Parallels Between Aviation and Healthcare AI

The similarities between aviation’s challenges and healthcare’s AI integration are both striking and concerning. Both domains involve complex systems making critical decisions where human lives hang in the balance. Both require user trust without complete transparency into how decisions are made. And both carry the potential for catastrophic outcomes when systems fail or are misunderstood.

The organizational and regulatory errors that allowed MCAS to be certified and deployed offer essential lessons for healthcare. Pilots were confused when MCAS activated unexpectedly. Healthcare providers could face similar confusion when AI systems recommend treatments or diagnoses without clear explanation or context.

Learning From Aviation’s Hard-Won Safety Lessons

Aviation’s safety evolution didn’t happen overnight. It emerged from tragic accidents, rigorous investigation, and systemic changes. Healthcare must now apply these lessons proactively rather than reactively. This means establishing:

- Comprehensive testing protocols that go beyond technical performance to include human factors and real-world scenarios

- Transparent documentation of system limitations and failure modes

- Mandatory training that ensures users understand both system capabilities and boundaries

- Robust oversight mechanisms that continuously monitor system performance and safety

Aviation’s safety failures provide a roadmap for what healthcare must accomplish. Just as aviation learned to balance automation with human oversight, healthcare must develop frameworks that leverage AI’s capabilities while maintaining appropriate human control and understanding.

Building Trust Through Transparency and Education

One of the most significant challenges in healthcare AI adoption is building trust among medical professionals, patients, and regulators. Unlike traditional medical devices or pharmaceuticals, AI systems can evolve and change their behavior based on new data, creating unique regulatory and safety considerations.

Failures of governance and oversight, such as those that allowed MCAS into service, highlight the importance of clear accountability frameworks. Healthcare organizations must develop comprehensive approaches to AI governance that address not just technical performance but also ethical considerations, data privacy, and patient safety.

The Path Forward: Proactive Rather Than Reactive Safety

Healthcare has the unique opportunity to learn from other industries’ mistakes rather than repeating them. This requires a fundamental shift in how we approach AI integration:

- Pre-deployment safety assessment that considers not just whether systems work, but how they might fail

- Continuous monitoring for unexpected behaviors or performance degradation

- Clear communication protocols for when systems encounter uncertainty or edge cases

- Comprehensive training that goes beyond basic operation to include system limitations and failure modes

Debates about leadership and decision-making at technology companies underscore how much organizational culture matters in safety-critical applications. Healthcare organizations must foster cultures that prioritize safety over speed and transparency over proprietary advantage.

Regulatory Evolution for a New Technological Era

Current regulatory frameworks for medical devices and healthcare technologies were designed for static, predictable systems. AI’s dynamic, adaptive nature requires new approaches to regulation and oversight. Regulators must balance the need for innovation with the imperative of patient safety, developing frameworks that can accommodate rapid technological advancement without compromising safety standards.

Comparing regulatory approaches across sectors makes it clear that healthcare AI requires specialized consideration. The stakes are too high to rely on approaches designed for less critical applications or to repeat mistakes made in other industries.

The transformation of healthcare through AI holds tremendous promise, but that promise must be tempered with wisdom gained from other sectors’ experiences. By learning from aviation’s costly errors and building systems with safety, transparency, and human oversight at their core, we can harness AI’s potential while avoiding tragic repetitions of history.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.

Note: Featured image is for illustrative purposes only and does not represent any specific product, service, or entity mentioned in this article.