The Turing Test’s Demise and AI’s New Frontier

When today’s most sophisticated AI language models can effortlessly pass as human in text-based conversations, we’ve reached a pivotal moment in artificial intelligence development. The Turing test, once considered the gold standard for machine intelligence, has become obsolete as a meaningful benchmark. At a recent gathering of leading minds at London’s Royal Society, researchers grappled with this reality and proposed a radical shift in how we evaluate and direct AI’s evolution.

Anil Seth, a neuroscientist from the University of Sussex, captured the sentiment perfectly: “Let’s figure out the kind of AI we want, and test for those things instead.” This represents a fundamental rethinking of AI evaluation—moving from measuring how well machines imitate humans to assessing how effectively they serve specific, beneficial purposes in our society.

Why AGI Might Be the Wrong Goal

The concept of Artificial General Intelligence (AGI)—often described as AI matching human cognitive abilities across all domains—faces increasing skepticism from researchers. Gary Marcus of New York University questioned whether AGI represents the appropriate objective, pointing to highly specialized but immensely valuable systems like Google DeepMind’s AlphaFold protein-structure predictor. “It does a single thing. It does not try to write sonnets,” he noted, highlighting how specialized excellence often delivers more practical value than generalized imitation.

This perspective aligns with recent proposals for moving beyond traditional AI evaluation methods that focus too narrowly on human-like performance. The limitations of current systems become apparent when they’re pushed beyond their training data: they struggle with novel reasoning tasks and fail to demonstrate an understanding of basic physical concepts.

The Intelligence Measurement Challenge

Shannon Vallor, an AI ethicist from the University of Edinburgh, challenged the very foundation of how we conceptualize intelligence in machines. She described AGI as “an outmoded scientific concept” that “doesn’t name a real entity or quality that exists,” noting that intelligence manifests differently across cultures, environments, and even species. This perspective suggests we should stop asking whether machines are intelligent and instead examine what specific capabilities they actually possess.

Vallor advocates for decomposing intelligence into distinct, measurable capabilities rather than treating it as a monolithic quality. This approach prevents us from attributing human-like understanding or empathy to systems that merely mimic surface-level behaviors. As researchers develop new evaluation frameworks, they’re considering how specific architectural elements influence AI capabilities and how these might be optimized for particular applications.

Practical Alternatives to the Turing Test

Researchers are exploring multiple avenues for creating more meaningful AI benchmarks. Marcus proposed a “Turing Olympics” consisting of approximately a dozen practical tests, including understanding film narratives and following instructions for assembling flat-pack furniture. These real-world challenges would better assess an AI’s practical utility than text-based imitation games.

Meanwhile, new evaluation frameworks like the Abstraction and Reasoning Corpus for AGI (ARC-AGI-2) aim to measure an AI’s ability to adapt to novel problems. Such approaches reflect the growing recognition that true intelligence involves flexible problem-solving rather than pattern matching. These developments in assessment methodology parallel advances in determining computational feasibility across different problem domains.

The Safety and Ethics Imperative

Perhaps the most significant shift in thinking concerns AI safety and ethical considerations. Vallor argued that the AGI focus distracts technology companies from addressing real-world harms, including de-skilling human workers, producing confident but incorrect information, and amplifying biases present in training data. She suggests that models should compete on safety metrics rather than intelligence benchmarks.

William Isaac of Google DeepMind echoed this concern, suggesting that future AI evaluation should prioritize whether systems are safe, reliable, and provide meaningful benefit—while also considering who bears the costs of these benefits. This safety-first approach acknowledges that as AI systems become more capable, their potential for unintended consequences grows. The conversation around responsible AI development intersects with broader discussions about ethical innovation across technology domains.

Embodied Intelligence and Cultural Context

An often-overlooked aspect of intelligence involves physical embodiment and cultural context. Seth noted that current tests tend to sideline “the importance of embodied forms of intelligence, the connection with a physical body,” even though these capabilities might be fundamental to how intelligence operates. This suggests that disembodied text-based systems might never fully capture important aspects of intelligence.

The cultural dimension presents another challenge, as what counts as intelligent behavior varies significantly across societies and historical periods. This recognition is driving research into more culturally aware evaluation frameworks that acknowledge the situated nature of intelligence. These developments reflect broader trends in personalized and context-aware systems across multiple fields.

Looking Forward: Purpose-Driven AI Development

The consensus emerging from these discussions points toward a future where AI development is guided by specific beneficial applications rather than abstract intelligence metrics. Instead of chasing the elusive goal of human-like general intelligence, researchers and developers might focus on creating systems that excel at particular tasks that benefit society.

This approach acknowledges that the most valuable AI systems might look nothing like human intelligence—they might possess superhuman capabilities in narrow domains while lacking basic common sense in others. As the field progresses, monitoring emerging frameworks for understanding system limitations will be crucial for responsible development.

The death of the Turing test as a meaningful benchmark doesn’t represent a failure of AI—rather, it signals the technology’s maturation to a point where we can have more nuanced conversations about what we want these systems to accomplish and how we can ensure they serve human interests safely and effectively.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.

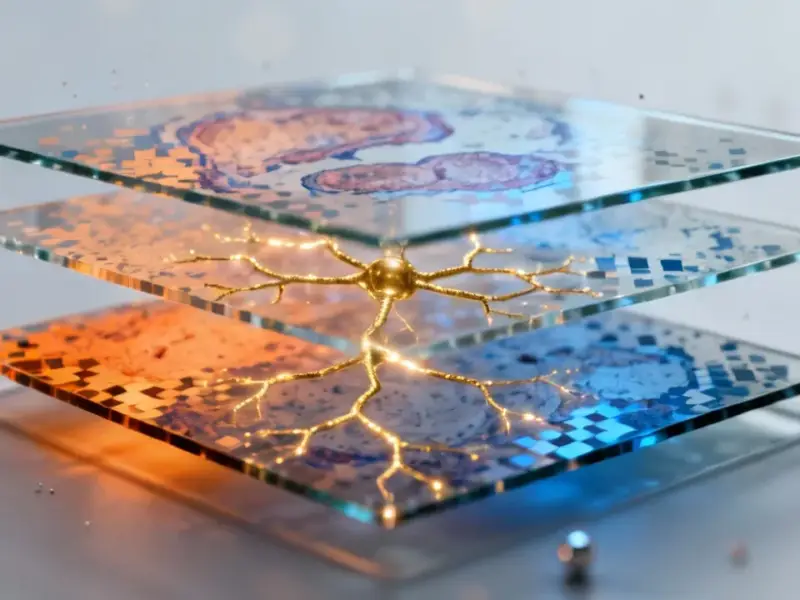

Note: Featured image is for illustrative purposes only and does not represent any specific product, service, or entity mentioned in this article.