According to VentureBeat, security leaders face a fundamental challenge: balancing AI’s transformative potential with necessary human oversight as AI moves from theoretical promise to operational reality. The pressure to adopt AI is intense. The article cites potential productivity improvements on the order of 10x, with investigation times falling from 60 minutes to just 5 minutes (a 12-fold reduction on that task). However, critical questions remain about which tasks should be automated and where human judgment remains irreplaceable, particularly for remediation and response actions that could have massive business impact. The article also notes that IDC estimates 1.3 billion agents by 2028, each requiring identity, permissions, and governance, which adds unprecedented complexity to access management. Security teams must therefore pursue these efficiency gains while maintaining core competencies and addressing the trust deficit in AI-driven decisions.

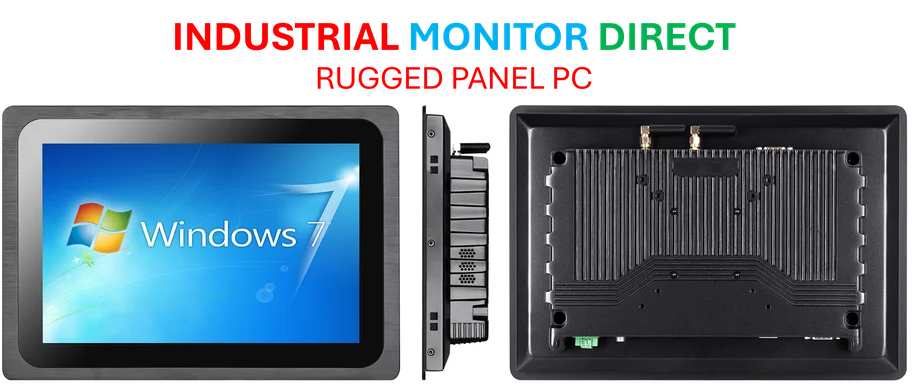

The Unseen Costs of Efficiency

While the promise of reducing investigation times from 60 minutes to 5 minutes sounds compelling, this efficiency comes with hidden costs that many organizations underestimate. The fundamental challenge with automation in security contexts isn’t just speed. It is the erosion of institutional knowledge and pattern recognition that occurs when analysts stop manually investigating alerts. Security teams develop critical intuition through repeated exposure to varied threats, and this tacit knowledge becomes increasingly difficult to transfer to new analysts when AI handles the bulk of routine work. The risk isn’t just about making wrong decisions faster; it’s about creating a generation of security professionals who lack the foundational skills to understand why certain patterns indicate malicious activity.

The Explainability Gap in AI Security

The transparency issue extends far beyond simply showing investigative steps; it touches on fundamental questions of accountability and legal liability. When an AI system incorrectly classifies a serious threat as benign and a breach occurs, who bears responsibility? The security team that deployed the system? The vendor that developed the AI? The organization’s leadership that approved the implementation? Current regulatory frameworks are woefully unprepared for these scenarios. The need for transparency isn’t just about building trust within the SOC. It is also about creating auditable decision trails that can withstand regulatory scrutiny and potential legal challenges.
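One way to picture an "auditable decision trail" is a log in which every AI verdict is stored with its evidence and chained to the previous entry by a hash, so later tampering is detectable. The sketch below is illustrative only: the `DecisionRecord` fields and the `AuditTrail` class are hypothetical names chosen for this example, not part of any product or standard mentioned in the article.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from typing import List, Optional


@dataclass
class DecisionRecord:
    """One AI verdict plus the context needed to review it later (hypothetical schema)."""
    alert_id: str
    verdict: str                    # e.g. "benign" or "malicious"
    evidence: List[str]             # investigative steps the AI reported
    model_version: str
    reviewer: Optional[str] = None  # human who signed off, if any
    prev_hash: str = ""
    record_hash: str = ""


def _digest(record: DecisionRecord) -> str:
    """Hash every field except the record's own hash."""
    payload = asdict(record)
    payload.pop("record_hash")
    encoded = json.dumps(payload, sort_keys=True).encode()
    return hashlib.sha256(encoded).hexdigest()


class AuditTrail:
    """Append-only log where each record commits to the one before it."""

    def __init__(self) -> None:
        self.records: List[DecisionRecord] = []

    def append(self, record: DecisionRecord) -> DecisionRecord:
        record.prev_hash = self.records[-1].record_hash if self.records else ""
        record.record_hash = _digest(record)
        self.records.append(record)
        return record

    def verify(self) -> bool:
        """Return False if any record was altered or the chain was broken."""
        prev = ""
        for record in self.records:
            if record.prev_hash != prev or record.record_hash != _digest(record):
                return False
            prev = record.record_hash
        return True
```

A chain like this only shows that the log was not edited after the fact. It does not answer who is liable, but it gives auditors a record of what the system decided, on what evidence, and whether a human reviewed it.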

The Coming Identity Management Crisis

The projection of 1.3 billion agents by 2028 represents perhaps the most underestimated governance challenge in cybersecurity history. Each AI agent requires not just identity and permissions, but ongoing monitoring, behavioral analysis, and anomaly detection. Traditional identity and access management systems were designed for human users with relatively predictable behavior patterns. AI agents operate at machine speed, can spawn sub-agents, and may exhibit emergent behaviors that weren’t anticipated during deployment. The OWASP MCP Top 10 highlights how quickly adversaries are exploiting these new attack surfaces, suggesting we’re already behind in establishing adequate security frameworks for AI agent ecosystems.
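To make the sub-agent problem concrete, here is a minimal sketch of one possible control: a spawned agent may only inherit a subset of its parent's permissions and may not outlive the parent's credential. The `AgentIdentity` class, the scope strings, and the `spawn` method are assumptions made for illustration; they do not describe any specific IAM product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import FrozenSet, Iterable, Optional


@dataclass(frozen=True)
class AgentIdentity:
    """Hypothetical identity record for a non-human agent."""
    agent_id: str
    owner: str                      # accountable human or team
    scopes: FrozenSet[str]          # permissions, e.g. "alerts:read"
    expires_at: datetime
    parent_id: Optional[str] = None

    def allows(self, scope: str, now: Optional[datetime] = None) -> bool:
        """Permit an action only while the credential is valid and in scope."""
        now = now or datetime.now(timezone.utc)
        return now < self.expires_at and scope in self.scopes

    def spawn(self, agent_id: str, requested: Iterable[str],
              ttl: timedelta) -> "AgentIdentity":
        """Create a sub-agent whose rights never exceed the parent's."""
        granted = self.scopes & frozenset(requested)
        expires = min(self.expires_at, datetime.now(timezone.utc) + ttl)
        return AgentIdentity(agent_id, self.owner, granted, expires,
                             parent_id=self.agent_id)
```

For example, a triage agent holding `alerts:read` and `tickets:write` that spawns an enrichment agent requesting `alerts:read` and `hosts:isolate` would produce a child with `alerts:read` only. Recording `parent_id` keeps the chain of delegation traceable back to a human owner.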

The Silent Erosion of Security Expertise

Perhaps the most concerning long-term implication is the potential atrophy of fundamental security skills. As AI handles more investigative work, organizations risk creating security teams that are effectively “AI operators” rather than true security experts. This creates a dangerous dependency where teams may lose the ability to manually investigate sophisticated attacks that evade AI detection. The solution requires more than occasional training exercises; it demands a complete rethinking of security career paths and competency models. Organizations must invest in continuous skill validation and create environments where manual investigation remains a valued, rewarded activity rather than an inefficient relic of the past.

The Unsolved Data Architecture Problem

None of these AI capabilities can deliver their promised benefits without solving the fundamental data challenges that have plagued security operations for decades. The VentureBeat article’s mention of data fabric architecture touches on a critical requirement, but most organizations are years away from achieving the unified data context needed for reliable AI security operations. Siloed data, inconsistent formatting, and missing metadata create conditions where AI systems make decisions based on incomplete or misleading information. The gap between the ideal of comprehensive security platforms and the reality of most organizations’ fragmented tool landscapes represents one of the biggest barriers to effective AI adoption in security.
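A small sketch can show what "inconsistent formatting and missing metadata" means in practice. The example below maps events from two tools onto one schema and records which required fields are absent, so a downstream AI system can see when it is working from incomplete data. The source names, field mappings, and the assumption that timestamps arrive as Unix epoch seconds are all hypothetical.

```python
from datetime import datetime, timezone
from typing import Any, Dict

# Fields a downstream model is assumed to need (illustrative choice).
REQUIRED_FIELDS = ("timestamp", "host", "user", "action")

# Hypothetical per-tool field names mapped to the common schema.
FIELD_MAPS = {
    "edr": {"ts": "timestamp", "hostname": "host",
            "username": "user", "event": "action"},
    "firewall": {"time": "timestamp", "src_host": "host",
                 "action": "action"},
}


def normalize(source: str, raw: Dict[str, Any]) -> Dict[str, Any]:
    """Rename fields to the common schema and flag what is missing."""
    mapping = FIELD_MAPS[source]
    event: Dict[str, Any] = {
        common: raw[native]
        for native, common in mapping.items()
        if raw.get(native) not in (None, "")
    }
    if "timestamp" in event:
        # Assumes epoch seconds; store as an ISO 8601 UTC string.
        event["timestamp"] = datetime.fromtimestamp(
            float(event["timestamp"]), tz=timezone.utc
        ).isoformat()
    event["source"] = source
    event["missing"] = [f for f in REQUIRED_FIELDS if f not in event]
    return event
```

In this sketch a firewall event never carries a user, so its `missing` list always contains `"user"`. Surfacing that gap explicitly, rather than silently defaulting the field, is the design choice that matters here.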

A Realistic Path Forward

The most practical approach to AI security adoption involves starting with well-defined, bounded use cases where the risks are manageable and the value is clear. Compliance and reporting represent excellent starting points, as these areas involve structured data and well-defined rules. However, organizations should resist the temptation to rapidly expand AI’s role beyond these initial applications. A phased approach that includes rigorous testing, gradual permission expansion, and continuous monitoring of both AI performance and human skill maintenance offers the most sustainable path. The goal shouldn’t be full automation, but rather creating collaborative workflows where human expertise and AI capabilities complement each other’s strengths while mitigating each other’s weaknesses.
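The phased approach described above could be expressed as a simple policy gate: each action has a minimum rollout phase at which the AI may perform it unattended, and high-impact remediation always routes to a human. This is a sketch under stated assumptions; the phase names, the action catalog, and the `decide` function are invented for illustration.

```python
from enum import IntEnum
from typing import Dict, Optional


class Phase(IntEnum):
    """Hypothetical rollout phases, from most to least restricted."""
    REPORT_ONLY = 1      # compliance and reporting
    RECOMMEND = 2        # AI enriches and suggests, humans act
    ACT_LOW_IMPACT = 3   # AI may take reversible, low-risk actions


# Minimum phase at which each action may run without approval.
# None means the action always requires a human, in every phase.
ACTION_MIN_PHASE: Dict[str, Optional[Phase]] = {
    "generate_compliance_report": Phase.REPORT_ONLY,
    "enrich_alert": Phase.RECOMMEND,
    "close_duplicate_alert": Phase.ACT_LOW_IMPACT,
    "isolate_host": None,
    "disable_account": None,
}


def decide(action: str, phase: Phase) -> str:
    """Return "auto", "needs_human_approval", or "deny" for an action."""
    if action not in ACTION_MIN_PHASE:
        return "deny"  # unknown actions are rejected by default
    min_phase = ACTION_MIN_PHASE[action]
    if min_phase is None or phase < min_phase:
        return "needs_human_approval"
    return "auto"
```

Expanding permissions then means raising the deployment's phase, or moving one action to a lower minimum phase, as a reviewed configuration change rather than an implicit drift in what the AI is allowed to do.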