According to Fortune, the U.S. government is making a $1 billion bet that AI can achieve what decades of cancer “moonshots” have failed to accomplish: turning cancer from an often fatal diagnosis into a manageable condition within five to eight years. Through a partnership with Advanced Micro Devices (AMD), the Department of Energy will build two of the world’s most advanced AI supercomputers, called Lux and Discovery, to accelerate research across fusion energy, national defense, and cancer treatment. Energy Secretary Chris Wright told Reuters these machines could help turn “most cancers, many of which today are ultimate death sentences, into manageable conditions” by the early 2030s. However, scientists like Trey Ideker of ARPA-H caution that while AI can make a “massive dent” in cancer, the field faces critical data limitations that must be addressed alongside computing power. This ambitious initiative represents one of the largest federal investments in medical AI to date.

The Data Paradox in Medical AI

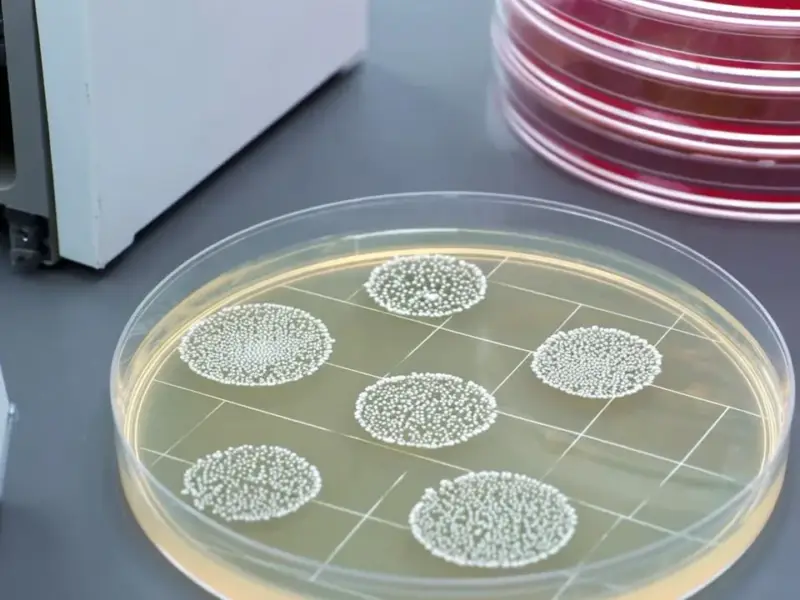

The fundamental challenge facing cancer AI isn’t computational power but data scarcity. Unlike AI domains where training data is abundant, cancer research operates in a fragmented ecosystem in which patient data remains siloed across thousands of healthcare institutions. Large language models like ChatGPT train on vast amounts of internet text, and autonomous vehicles benefit from millions of logged driving hours, but cancer models must make do with whatever data hospitals are willing to share. The result is a paradox: we are building supercomputers capable of processing unprecedented amounts of information, yet we lack the comprehensive, multimodal datasets needed to train them effectively. The success of this billion-dollar investment hinges on solving this data access problem through better integration with real hospital systems and federal data collection initiatives like ARPA-H’s ADAPT program.

Clinical Reality vs. AI Hype

What’s often missing from discussions about AI in medicine is the practical reality of clinical implementation. Ideker’s metaphor of AI as “the quiet assistant in the corner” rather than an autonomous decision-maker reflects the more realistic near-term future. In oncology tumor boards, where specialists debate complex cases, AI won’t replace human judgment but can serve as an exhaustive knowledge resource that has “read everything” from medical literature to clinical trial results. This collaborative approach acknowledges both the limitations of current AI systems and the nuanced nature of cancer treatment decisions. The technology’s real value lies in augmenting human expertise rather than replacing it, particularly when patients stop responding to standard treatments and physicians need guidance on next steps.

The Timeline Reality Check

While the five-to-eight-year timeline for making cancer “manageable” sounds ambitious, it’s important to understand what this means in clinical practice. The first achievable milestone, matching every patient to the best existing treatment for their specific tumor type, is the low-hanging fruit of precision medicine. It involves using AI to analyze genetic markers, tumor characteristics, and treatment response patterns across large datasets to identify optimal therapeutic matches. The harder challenge of designing new drugs in real time for treatment-resistant cancers remains further off. The Department of Energy’s involvement brings crucial supercomputing expertise to drug discovery, but the biological complexity of cancer means that true personalized drug design will require scientific breakthroughs beyond computational power alone.

Implementation Challenges Ahead

Several significant hurdles stand between this ambitious vision and real-world impact. Data standardization remains a massive challenge—different hospitals use incompatible electronic health record systems, imaging formats, and laboratory reporting methods. Privacy regulations, while necessary, create additional barriers to data sharing. There’s also the question of algorithmic transparency: will physicians trust AI recommendations without understanding how they were generated? The partnership with AMD addresses the hardware side, but success will depend equally on software development, data infrastructure, and clinical workflow integration. Early projects like UCSD’s digital tumor initiative show promise, but scaling these approaches nationally will require unprecedented coordination between government agencies, research institutions, and healthcare providers.

Broader Implications for Healthcare AI

This initiative represents a strategic shift in how the U.S. government approaches medical research funding. By leveraging the DOE’s supercomputing expertise rather than relying solely on traditional biomedical research channels, the government acknowledges that solving complex biological problems requires interdisciplinary approaches. The success or failure of this $1 billion bet will likely influence future investments in healthcare AI and set precedents for public-private partnerships in medical research. If successful, the infrastructure and methodologies developed could be applied to other complex diseases beyond cancer, potentially accelerating progress across multiple therapeutic areas. However, the initiative also raises important questions about resource allocation, in particular whether such massive investments in computing infrastructure are a more efficient path to medical breakthroughs than funding more traditional biological research.