According to Fortune, OpenAI has signed a $38 billion deal with Amazon Web Services (AWS) that lets the ChatGPT maker run its artificial intelligence systems in Amazon’s data centers in the U.S. The agreement, announced Monday, gives OpenAI access to “hundreds of thousands” of Nvidia’s specialized AI chips through AWS. Amazon shares rose 4% after the announcement. OpenAI will begin using AWS compute capacity immediately, with all capacity targeted for deployment before the end of 2026 and the option to expand further into 2027 and beyond. The deal comes less than a week after OpenAI revised its partnership with longtime backer Microsoft, and it follows regulatory approval for OpenAI to adopt a new business structure that makes it easier to raise capital. This expansion beyond its exclusive reliance on Microsoft marks a pivotal moment in the competition to supply cloud infrastructure for AI.

The End of Cloud Exclusivity in AI

OpenAI’s decision to diversify beyond Microsoft Azure represents a fundamental shift in how leading AI companies approach infrastructure strategy. For years, the conventional wisdom held that a deep partnership with a single cloud provider offered the best path to scale; OpenAI’s move signals that the era of cloud exclusivity in AI may be ending. It mirrors a familiar pattern in other technology sectors, where market leaders eventually diversify their supply chains to reduce risk and gain negotiating leverage. The timing is significant: coming right after OpenAI’s regulatory approval to restructure for easier access to capital, the infrastructure expansion looks like part of a broader strategic push toward independence and scale.

AWS’s Strategic Countermove in the AI Cloud War

Amazon’s commitment to OpenAI is more than another cloud customer win; it is a strategic necessity for AWS in the AI infrastructure race. While AWS has led general-purpose cloud computing, Microsoft’s early bet on OpenAI gave Azure a significant advantage in AI-specific compute. This deal lets AWS compete immediately at the top tier of AI infrastructure by hosting a leading frontier-model developer, without Amazon having to build its own equivalent of OpenAI’s models from scratch. Amazon’s parallel investment in Anthropic, long positioned as its primary AI partner, now looks more like a hedge than an exclusive commitment, showing AWS’s willingness to back multiple leading AI companies at once.

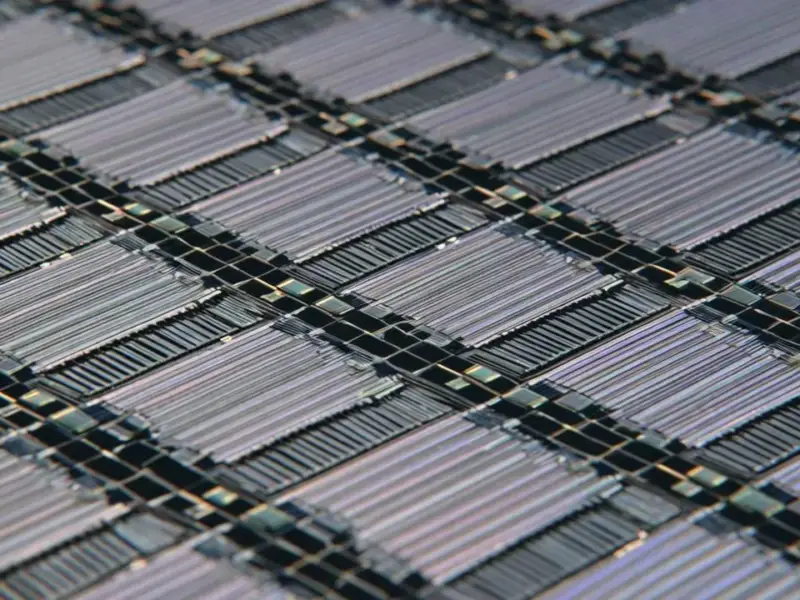

The Coming AI Infrastructure Capacity Crunch

The scale of this deal, with hundreds of thousands of Nvidia chips to be deployed by the end of 2026, shows how severe the AI compute shortage has become. OpenAI’s acknowledgment of more than $1 trillion in financial obligations for AI infrastructure suggests that access to computing power, not just algorithmic innovation, will increasingly determine competitive advantage. That creates a difficult dynamic: AI companies must make massive capital commitments years in advance based on uncertain demand projections. The infrastructure arms race also risks a “haves versus have-nots” divide in AI, where only companies with billion-dollar cloud partnerships can compete at the frontier-model level.

The Looming Financial Sustainability Question

While OpenAI CEO Sam Altman has expressed confidence in the company’s steep revenue growth, the financial structure of these infrastructure deals deserves scrutiny. Cloud providers are in effect extending enormous credit to AI companies on the strength of future revenue projections, a model that recalls the “build it and they will come” mentality of the dot-com era. These investments are also circular: cloud providers fund infrastructure for companies that then become their customers, creating complex interdependencies. If growth in AI adoption slows or plateaus before these companies become profitable, these multi-year commitments could face significant write-downs and restructuring.

What This Means for the Next 24 Months

Looking ahead, this deal points to three emerging trends. First, expect other major AI companies to pursue multi-cloud strategies that reduce their dependence on any single provider. Second, competition for Nvidia GPU capacity will intensify, which may spur alternative AI chip architectures from AMD, Intel, and the cloud providers’ own custom silicon. Third, more creative financing structures are likely to emerge as the capital requirements of AI infrastructure outstrip what traditional venture funding can support. The cloud AI market is shifting from partnership ecosystems toward something resembling the semiconductor industry’s capital-intensive model, where only the best-funded players can compete at the cutting edge.