According to TechRepublic, Nvidia has licensed Groq’s AI inference-chip technology in a deal reported to be valued at roughly $20 billion. The agreement, dated December 24, is non-exclusive and allows Groq to continue operating independently. As part of the arrangement, Nvidia is hiring Groq’s senior leadership and engineering talent, including founder Jonathan Ross and president Sunny Madra. The move signals Nvidia’s strategic focus on the fast-growing AI inference market, where models are run in production, rather than just trained. This licensing approach is seen as a way to accelerate innovation while avoiding the regulatory scrutiny of a full acquisition.

The why behind the billions

So, why is Nvidia doing this? And why now? Here’s the thing: Nvidia already owns the AI training market. Its GPUs are the undisputed champs for building massive models. But the next trillion dollars in AI value isn’t about building the models—it’s about using them. That’s inference. It’s the difference between constructing a factory and running it 24/7 to fulfill millions of orders. Companies are moving past the “cool experiment” phase and need to deploy AI cheaply, quickly, and reliably at a global scale. That’s a different hardware problem, and it’s where Groq built its entire reputation.

What Groq brings to the table

Groq’s tech, centered on its Language Processing Unit (LPU), isn’t a GPU. It’s built for deterministic execution. Basically, that means predictable, low-latency performance: the same request takes close to the same time on every run. When a customer service chatbot or a logistics routing model needs to answer in milliseconds, every single time, you can’t have the variable performance that sometimes comes with general-purpose architectures. Groq’s hardware is designed from the ground up for that specific, repeatable speed. By licensing this tech and bringing in the brains behind it, Nvidia isn’t just buying a blueprint; it’s acquiring deep institutional knowledge of how to approach inference-first design. It can now fold that thinking into its own roadmap and speed up its work in this critical area.
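To make the latency point concrete, here is a minimal sketch in Python. It does not model Groq’s LPU or any GPU. The two latency profiles, every number in them, and the function names are hypothetical, picked only to show why a production service cares about its worst-case response time (p99) and not just the typical one (p50).

```python
import math
import random


def percentile(samples, pct):
    """Nearest-rank percentile of a list of numbers (pct between 0 and 100)."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(len(ordered) * pct / 100))
    return ordered[rank - 1]


def simulate_deterministic(n, base_ms=10.0):
    # Hypothetical profile: every request takes exactly the same time.
    return [base_ms for _ in range(n)]


def simulate_variable(n, rng, base_ms=8.0, stall_ms=40.0, stall_prob=0.05):
    # Hypothetical profile: usually a bit faster, but a small share of
    # requests hit a stall (an assumed stand-in for contention or queuing).
    samples = []
    for _ in range(n):
        latency = base_ms + rng.uniform(0.0, 2.0)
        if rng.random() < stall_prob:
            latency += stall_ms
        samples.append(latency)
    return samples


rng = random.Random(0)  # fixed seed so the run is repeatable
steady = simulate_deterministic(10_000)
jittery = simulate_variable(10_000, rng)

for name, samples in (("deterministic", steady), ("variable", jittery)):
    p50 = percentile(samples, 50)
    p99 = percentile(samples, 99)
    print(f"{name}: p50={p50:.1f} ms, p99={p99:.1f} ms")
```

In this toy setup the variable profile wins on the median but loses badly at p99, which is the trade-off described above: a chatbot that is usually fast but sometimes stalls feels unreliable to the user who hits the stall.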

A calculated structure

The non-exclusive, licensing-plus-hire structure is fascinating. It’s a brilliant hedge. Nvidia gets the tech and the talent it wants without the baggage and full cost of an acquisition. More importantly, it likely sidesteps a massive antitrust headache. Groq gets a monster validation stamp and a huge payday, and it keeps doing its own thing: it can still sell its chips and cloud services. This isn’t an exit; it’s a powerful partnership that gives Groq resources and reach while giving Nvidia a shortcut to advanced inference capabilities. As some analysts note, the $20B figure seems astronomical for a licensing deal. Is it about near-term revenue? Probably not. It’s a strategic bet on controlling the foundational tech for the next decade of AI deployment. If inference becomes the larger market, securing a leadership position now is worth almost any price.

The broader hardware landscape

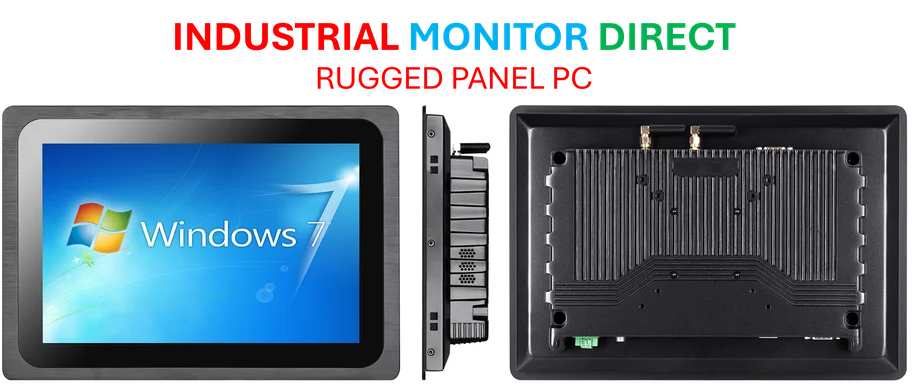

This deal sends shockwaves through the entire AI hardware sector. It’s Nvidia acknowledging that even its empire has potential weak points, and it’s moving decisively to fortify them. For other inference-focused startups, it’s a mixed signal. On one hand, it shows there’s immense value in their specialization. On the other, they’re now competing with an Nvidia that’s directly absorbing the secret sauce of one of their strongest competitors. The focus on deterministic, reliable performance for deployment also mirrors a broader trend in industrial computing, where predictable operation is non-negotiable. The underlying principle is identical: when you move from the lab to the real world, consistency is king. Nvidia’s bet is that the future of AI isn’t just about raw power, but about predictable, scalable execution. And with this deal, it’s buying the insurance policy to own it.