Sharp Increase in AI-Powered Cyber Operations

Nation-state actors including Russia, China, Iran, and North Korea have significantly escalated their use of artificial intelligence to conduct deceptive online operations and sophisticated cyberattack campaigns against the United States, according to new research from Microsoft. The company's annual digital threats report, published Thursday, indicates that these foreign adversaries are adopting increasingly innovative tactics to weaponize the internet for espionage and deception.


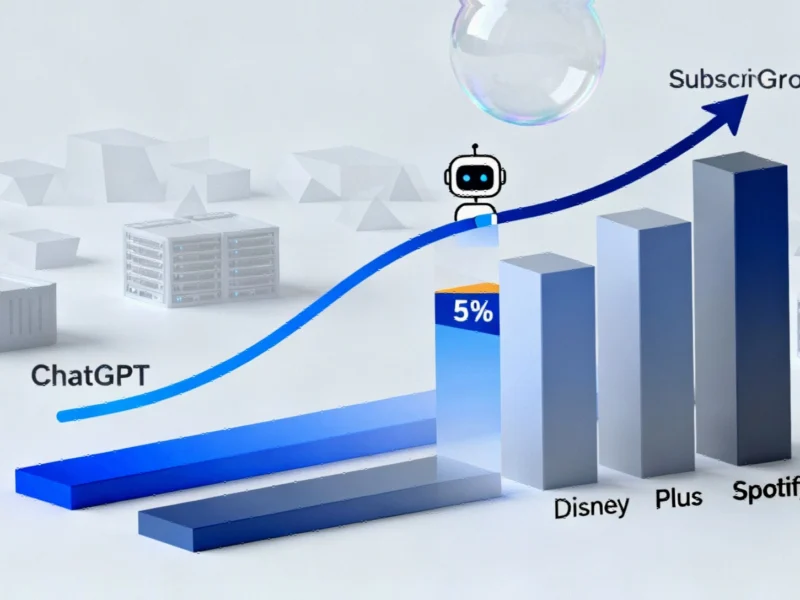

Unprecedented Growth in AI-Enabled Threats

The report states that in July alone, Microsoft identified more than 200 instances of foreign adversaries using AI to create fake online content. That is more than double the number from July of the previous year and more than ten times the total observed in all of 2023. Analysts suggest this rapid growth shows how quickly nation-state actors are integrating AI capabilities into their offensive operations.

Sophisticated Attack Methods Emerging

According to the report, America's adversaries, along with criminal organizations and hacking groups, are exploiting AI to automate and enhance cyberattacks, spread inflammatory disinformation, and penetrate sensitive systems. AI can now turn poorly worded phishing emails into fluent, convincing English and generate realistic digital clones of senior government officials. These capabilities reportedly make social engineering attacks more effective and harder to distinguish from legitimate communications.

Broader Pattern of Digital Aggression

These findings align with other recent reports documenting state-sponsored cyber activities. Chinese hacking operations have previously targeted critical infrastructure, while Russian disinformation campaigns have exploited natural disasters to spread false narratives. Additionally, security researchers have documented how AI-generated misinformation featuring world leaders has circulated across social media platforms.

Election Security Concerns Intensify

The report comes amid growing concerns about foreign election interference operations targeting democratic processes. Microsoft’s findings suggest that AI capabilities are making these influence operations more sophisticated and scalable. The technology reportedly enables actors to create convincing fake content at unprecedented speed and volume, potentially overwhelming traditional detection methods.

Advanced Persistent Threat Campaigns

According to cybersecurity analysts, state-sponsored hacking groups have been observed using AI to enhance their operational capabilities. Chinese cyber espionage campaigns have targeted political figures and organizations, while security experts warn that AI-powered impersonation technology could be used to undermine public trust in institutions.

Corporate and Government Response

Microsoft's report emphasizes the urgent need for stronger cybersecurity measures across both the public and private sectors. The findings suggest that traditional security approaches may be insufficient against AI-enhanced threats and that new defensive strategies and technologies will be required. According to analysts, the rapid adoption of AI by malicious actors represents a fundamental shift in the cyber threat landscape, one that demands a coordinated response from government agencies, technology companies, and security researchers.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.
