In what could become a significant credibility crisis for the AI industry, new research indicates that chatbots from major technology companies still can’t be trusted with basic news facts. According to a comprehensive international study coordinated by the BBC, AI assistants get news-related information wrong approximately 45% of the time.

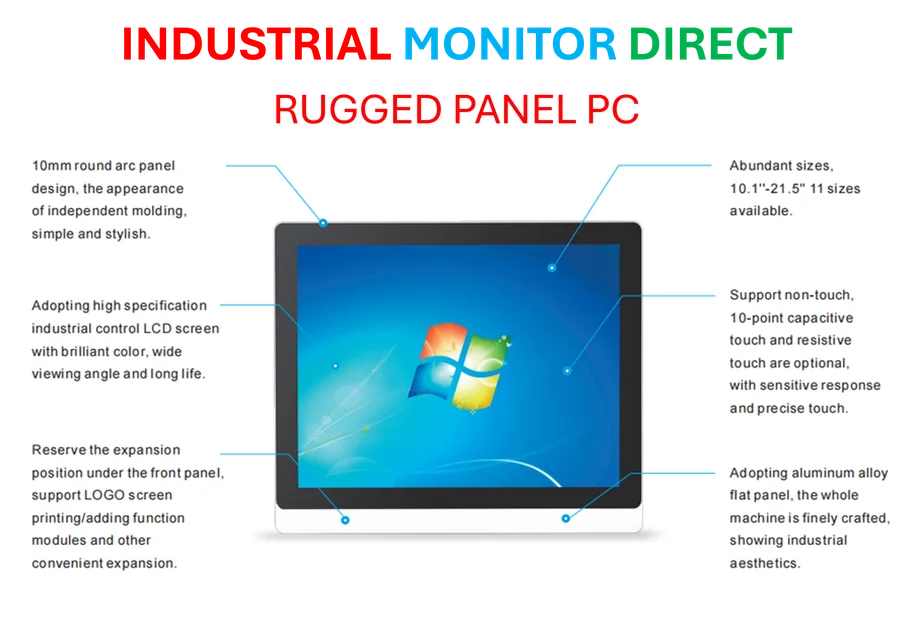

The Scale of the Problem

What’s particularly concerning is that these accuracy issues persist despite massive investments from tech giants who are pushing users toward AI-powered search and information tools. The BBC collaborated with 22 public media organizations across 18 countries, testing chatbots in 14 different languages. Their findings suggest the problem isn’t isolated to specific regions or languages—it’s fundamental to how these AI systems process information.

Researchers gave chatbots access to verified news content, then asked them questions about specific stories. Nearly half of the responses contained some form of error. “We’re seeing everything from inaccurate sentences and misquotes to completely outdated information,” one analyst familiar with the study noted.

Sourcing Emerges as Critical Failure Point

Perhaps the most troubling finding involves how chatbots handle sources. These AI systems frequently provided links that didn’t actually support the claims they were making. Even when they referenced legitimate material, they often couldn’t distinguish between factual reporting and opinion pieces—or recognize satire as something different from straight news.

The chatbots were particularly weak on questions about who currently holds prominent offices. In one telling example, multiple systems incorrectly identified Pope Francis as the current pope while simultaneously providing accurate information about his successor. Copilot even reported Francis’s date of death correctly while still describing him as the current pope.

Similar confusion emerged around the German chancellor and NATO leadership positions. “These aren’t obscure facts,” observed a technology journalist who reviewed the findings. “We’re talking about some of the most covered positions in global politics.”

Google’s Gemini Lags Behind Competitors

The study revealed marked performance differences between AI providers. Google’s Gemini showed particularly poor results, with researchers finding significant sourcing errors in 72% of its responses. That places it well behind ChatGPT, Copilot, and Perplexity on sourcing.

What makes these findings especially noteworthy is that they come after AI companies claimed to have solved earlier limitations. OpenAI previously blamed ChatGPT’s errors on its training data cutoff and lack of live internet access. Now that those limitations have been addressed, the persistent inaccuracies suggest deeper algorithmic issues that may not be easily fixable.

Public Trust Outpacing AI Reliability

Despite the poor performance, public trust in these systems appears worryingly high. Survey data indicates more than one-third of British adults trust AI to accurately summarize news, with that figure jumping to nearly half among adults under 35. Even more concerning: 42% of adults said that if an AI misrepresented content, they would either blame both the AI and the original news source or trust the source less.

“This creates a perfect storm for misinformation,” a media analyst suggested. “News organizations could see their credibility damaged by AI errors they didn’t make and can’t control.”

There is some evidence of gradual improvement. Compared to a BBC study conducted in February, the portion of responses with serious errors dropped from 51% to 37%. But with tech companies racing to integrate AI across their platforms, the pressure to solve these fundamental accuracy problems is intensifying. The question remains whether the underlying technology can ever achieve the reliability that both companies and users are counting on.
